DataX写HIVE踩坑 #

mysql写入hive,hive开启了Kerbero认证

DataX HdfsWriter 插件文档https://github.com/alibaba/DataX/blob/master/hdfswriter/doc/hdfswriter.md

参数获取方法说明:

defaultFS:/alidata2/server/hadoop-3.3.5/etc/hadoop/core-site.xml

1<property>

2 <name>fs.defaultFS</name>

3 <value>hdfs://node-a4:9000</value>

4</property>

path:

要查看 Hive 中设置的数据仓库的存储路径,您可以查询 Hive 的配置参数 hive.metastore.warehouse.dir。您可以通过在 Hive CLI 或者 Beeline 中执行以下命令来查看此配置参数的值:

1SET hive.metastore.warehouse.dir;

这将显示 Hive 数据仓库的当前存储路径。请注意,这个路径通常位于 HDFS 中,例如:hive.metastore.warehouse.dir=/gaohaifeng/hive/warehouse。

如果您想在 Hadoop HDFS 中查看这个路径下的文件和目录,可以使用 hadoop fs -ls 命令,例如:

1hadoop fs -ls /gaohaifeng/hive/warehouse

请将 /gaohaifeng/hive/warehouse 替换为您实际的数据仓库路径。

最终得到path:/gaohaifeng/hive/warehouse/ + 表名

writeMode:

- append,写入前不做任何处理,DataX hdfswriter直接使用filename写入,并保证文件名不冲突。

- nonConflict,如果目录下有fileName前缀的文件,直接报错。

- truncate,如果目录下有fileName前缀的文件,先删除后写入。

fieldDelimiter:通过建表语句查询表详情

1hive> SHOW CREATE TABLE my_table;

212 rows selected (0.174 seconds)

0: jdbc:hive2://node-a5:10000/default> show create table school_info;

INFO : Compiling command(queryId=root_20240106115704_ce3f591e-977d-4b1d-9716-93ef1eadb9fd): show create table school_info

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Semantic Analysis Completed (retrial = false)

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:createtab_stmt, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=root_20240106115704_ce3f591e-977d-4b1d-9716-93ef1eadb9fd); Time taken: 0.032 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=root_20240106115704_ce3f591e-977d-4b1d-9716-93ef1eadb9fd): show create table school_info

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=root_20240106115704_ce3f591e-977d-4b1d-9716-93ef1eadb9fd); Time taken: 0.098 seconds

INFO : OK

INFO : Concurrency mode is disabled, not creating a lock manager

+----------------------------------------------------+

| createtab_stmt |

+----------------------------------------------------+

| CREATE TABLE `school_info`( |

| `id` bigint, |

| `school_id` bigint, |

| `school_name` string, |

| `full_pinyin` string, |

| `head_pinyin` string, |

| `school_code` string, |

| `province` string, |

| `city` string, |

| `area` string, |

| `address` string, |

| `parent_org_id` bigint, |

| `period` string, |

| `period_name` string, |

| `education_type` string, |

| `education_type_name` string, |

| `telephone` string, |

| `curr_cpscode` int, |

| `agent_id` int, |

| `agent_name` string, |

| `num_student` int, |

| `num_teacher` string, |

| `num_class` string, |

| `is_batch_upgrade` tinyint, |

| `note` string, |

| `status` tinyint, |

| `time_create` int, |

| `time_modified` int, |

| `creator_app` bigint) |

| ROW FORMAT SERDE |

| 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe' |

| STORED AS INPUTFORMAT |

| 'org.apache.hadoop.mapred.TextInputFormat' |

| OUTPUTFORMAT |

| 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat' |

| LOCATION |

| 'hdfs://node-a4:9000/gaohaifeng/hive/warehouse/school_info' |

| TBLPROPERTIES ( |

| 'bucketing_version'='2', |

| 'transient_lastDdlTime'='1704178556') |

+----------------------------------------------------+

40 rows selected (0.152 seconds)

从您提供的 `SHOW CREATE TABLE` 输出中,我们可以看到这个表使用的是 `LazySimpleSerDe`。但是,表的创建语句中没有明确指定分隔符。在这种情况下,Hive 会使用默认的分隔符。

对于 `LazySimpleSerDe`,默认的字段分隔符是 ASCII 码值为 1 的字符(`\001`),默认的集合分隔符是 ASCII 码值为 2 的字符(`\002`),默认的映射键分隔符是 ASCII 码值为 3 的字符(`\003`)。

所以,这张表的默认字段分隔符是 `\001`。

最终fieldDelimiter:“\1”

解决方案 #

1、建表分隔符和导入时的分隔符不一致 #

1.修改建表分隔符 #

1alter table ds.ods_user_info_dd set serdeproperties('field.delim'='\t');

复制

2.建表时直接指定好分隔符 #

1create table table_name(` `a bigint,` `b string` `)` `comment 'xx表' ``partitioned by (`ds` string) ``row format delimited fields terminated by '\t';

复制

3.针对分区表和无分区表的区别 #

最终配置:

1{

2

3 "job": {

4

5 "setting": {

6

7 "speed": {

8

9 "channel": 3

10

11 },

12

13 "errorLimit": {

14

15 "record": 0,

16

17 "percentage": 0.02

18

19 }

20

21 },

22

23 "content": [

24

25 {

26

27 "reader": {

28

29 "name": "mysqlreader",

30

31 "parameter": {

32

33 "username": "root",

34

35 "password": "****",

36

37 "column": [

38

39 "id",

40

41 "school_id",

42

43 "school_name",

44

45 "full_pinyin",

46

47 "head_pinyin",

48

49 "school_code",

50

51 "province",

52

53 "city",

54

55 "area",

56

57 "address",

58

59 "parent_org_id",

60

61 "period",

62

63 "period_name",

64

65 "education_type",

66

67 "education_type_name",

68

69 "telephone",

70

71 "curr_cpscode",

72

73 "agent_id",

74

75 "agent_name",

76

77 "num_student",

78

79 "num_teacher",

80

81 "num_class",

82

83 "is_batch_upgrade",

84

85 "note",

86

87 "status",

88

89 "time_create",

90

91 "time_modified",

92

93 "creator_app"

94

95 ],

96

97 "table": [

98

99 "school_info"

100

101 ],

102

103 "connection": [

104

105 {

106

107 "table": [

108

109 "school_info"

110

111 ],

112

113 "jdbcUrl": [

114

115 "jdbc:mysql://server:port/cloud_school?allowMultiQueries=true&useUnicode=true&characterEncoding=UTF-8&serverTimezone=GMT%2B8&useSSL=false"

116

117 ]

118

119 }

120

121 ]

122

123 }

124

125 },

126

127 "writer": {

128

129 "name": "hdfswriter",

130

131 "parameter": {

132

133 "defaultFS": "hdfs://node-a4:9000",

134

135 "fileType": "text",

136

137 "path": "/gaohaifeng/hive/warehouse/school_info",

138

139 "fileName": "school_info",

140

141 "writeMode": "truncate",

142

143 "fieldDelimiter": "\1",

144

145 "column": [

146

147 {

148

149 "name": "id",

150

151 "type": "BIGINT"

152

153 },

154

155 {

156

157 "name": "school_id",

158

159 "type": "BIGINT"

160

161 },

162

163 {

164

165 "name": "school_name",

166

167 "type": "STRING"

168

169 },

170

171 {

172

173 "name": "full_pinyin",

174

175 "type": "STRING"

176

177 },

178

179 {

180

181 "name": "head_pinyin",

182

183 "type": "STRING"

184

185 },

186

187 {

188

189 "name": "school_code",

190

191 "type": "STRING"

192

193 },

194

195 {

196

197 "name": "province",

198

199 "type": "STRING"

200

201 },

202

203 {

204

205 "name": "city",

206

207 "type": "STRING"

208

209 },

210

211 {

212

213 "name": "area",

214

215 "type": "STRING"

216

217 },

218

219 {

220

221 "name": "address",

222

223 "type": "STRING"

224

225 },

226

227 {

228

229 "name": "parent_org_id",

230

231 "type": "BIGINT"

232

233 },

234

235 {

236

237 "name": "period",

238

239 "type": "STRING"

240

241 },

242

243 {

244

245 "name": "period_name",

246

247 "type": "STRING"

248

249 },

250

251 {

252

253 "name": "education_type",

254

255 "type": "STRING"

256

257 },

258

259 {

260

261 "name": "education_type_name",

262

263 "type": "STRING"

264

265 },

266

267 {

268

269 "name": "telephone",

270

271 "type": "STRING"

272

273 },

274

275 {

276

277 "name": "curr_cpscode",

278

279 "type": "INT"

280

281 },

282

283 {

284

285 "name": "agent_id",

286

287 "type": "INT"

288

289 },

290

291 {

292

293 "name": "agent_name",

294

295 "type": "STRING"

296

297 },

298

299 {

300

301 "name": "num_student",

302

303 "type": "INT"

304

305 },

306

307 {

308

309 "name": "num_teacher",

310

311 "type": "STRING"

312

313 },

314

315 {

316

317 "name": "num_class",

318

319 "type": "STRING"

320

321 },

322

323 {

324

325 "name": "is_batch_upgrade",

326

327 "type": "TINYINT"

328

329 },

330

331 {

332

333 "name": "note",

334

335 "type": "STRING"

336

337 },

338

339 {

340

341 "name": "status",

342

343 "type": "TINYINT"

344

345 },

346

347 {

348

349 "name": "time_create",

350

351 "type": "INT"

352

353 },

354

355 {

356

357 "name": "time_modified",

358

359 "type": "INT"

360

361 },

362

363 {

364

365 "name": "creator_app",

366

367 "type": "BIGINT"

368

369 }

370

371 ],

372

373 "haveKerberos": true,

374

375 "kerberosPrincipal": "kylin/node-a4@cp.com",

376

377 "kerberosKeytabFilePath": "/alidata2/kerberos/f125ce826a764258aa7f3781f813b1e7/kylin.keytab"

378

379 }

380

381 }

382

383 }

384

385 ]

386

387 }

388

389}

【踩坑实录】datax从pg同步数据到hive数据全为null问题https://cloud.tencent.com/developer/article/2161115

DataX Hdfs HA(高可用)配置支持https://blog.csdn.net/qq_45688530/article/details/127239141

如果开启了HA,则hive中无论如何都无法执行成功,因为会一直报错:

一月 06, 2024 11:06:34 上午 org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer createBlockOutputStream

信息: Exception in createBlockOutputStream

java.io.IOException: Connection reset by peer

at sun.nio.ch.FileDispatcherImpl.read0(Native Method)

at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39)

at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223)

at sun.nio.ch.IOUtil.read(IOUtil.java:197)

at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:379)

at org.apache.hadoop.net.SocketInputStream$Reader.performIO(SocketInputStream.java:57)

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:142)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:2278)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1318)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1237)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:449)

一月 06, 2024 11:06:34 上午 org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer nextBlockOutputStream

信息: Abandoning BP-845261063-10.2.1.24-1684142186278:blk_1073801732_61005

一月 06, 2024 11:06:34 上午 org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer nextBlockOutputStream

信息: Excluding datanode DatanodeInfoWithStorage[10.3.1.23:9866,DS-c93861b8-deef-45d0-ad25-959822842fa7,DISK]

一月 06, 2024 11:06:34 上午 org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer createBlockOutputStream

信息: Exception in createBlockOutputStream

java.io.IOException: Connection reset by peer

at sun.nio.ch.FileDispatcherImpl.read0(Native Method)

at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39)

at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223)

at sun.nio.ch.IOUtil.read(IOUtil.java:197)

at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:379)

at org.apache.hadoop.net.SocketInputStream$Reader.performIO(SocketInputStream.java:57)

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:142)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:2278)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1318)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1237)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:449)

一月 06, 2024 11:06:34 上午 org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer nextBlockOutputStream

信息: Abandoning BP-845261063-10.2.1.24-1684142186278:blk_1073801733_61006

一月 06, 2024 11:06:34 上午 org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer nextBlockOutputStream

信息: Excluding datanode DatanodeInfoWithStorage[10.3.1.21:9866,DS-6d1b55cf-62a9-4a87-b64b-220dfc9486e7,DISK]

一月 06, 2024 11:06:34 上午 org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer createBlockOutputStream

信息: Exception in createBlockOutputStream

java.io.IOException: Connection reset by peer

at sun.nio.ch.FileDispatcherImpl.read0(Native Method)

at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39)

at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223)

at sun.nio.ch.IOUtil.read(IOUtil.java:197)

at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:379)

at org.apache.hadoop.net.SocketInputStream$Reader.performIO(SocketInputStream.java:57)

at org.apache.hadoop.net.SocketIOWithTimeout.doIO(SocketIOWithTimeout.java:142)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:161)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:131)

at org.apache.hadoop.net.SocketInputStream.read(SocketInputStream.java:118)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at java.io.FilterInputStream.read(FilterInputStream.java:83)

at org.apache.hadoop.hdfs.protocolPB.PBHelper.vintPrefixed(PBHelper.java:2278)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.createBlockOutputStream(DFSOutputStream.java:1318)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.nextBlockOutputStream(DFSOutputStream.java:1237)

at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:449)

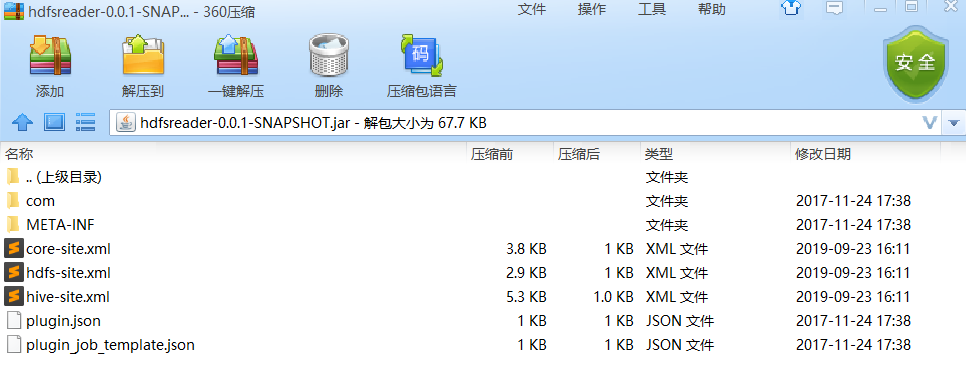

此时则需要重构datax的hdfswriter插件,即 /datax/plugin/writer/hdfswriter/hdfswriter-0.0.1-SNAPSHOT.jar

将hdfs-site.xml hive-site.xml core-site.xml 文件压缩进jar中并替换

使用此方法配置后,datax json中连hadoopCofig参数都不需要配置了